Introduction

Truth in the Age of AI: Top Tools for Detecting Fake Images and Content

In our hyper-connected digital landscape, where a single piece of information is created, shared, and reshared many times over, the need for authenticity is greater than ever. Artificial intelligence (AI) is evolving rapidly, enabling hyper-realistic images, deepfake videos, and auto-generated text that make it hard to tell human-created content from AI-generated content. The risk of misinformation, deception, and unintended ethical misuse will only increase as the line between real and fake blurs.

To address this issue, a variety of new AI-based detection and analysis tools are becoming available. These tools aim to help users assess the origin, trustworthiness, and veracity of visual and text content, and so help maintain digital integrity. In this article, we look at a suite of tools that occupy a key position in this space: Nuanced, Circle to Search, HealthKey, Extracta.ai, EyePop.ai, and Content Credentials. We break down their features, real-world uses, and ethical implications, and consider what they collectively mean for how we will interact with digital content.


Nuanced: AI for Authenticity Detection

Nuanced is at the leading edge of detecting AI-generated content. Its algorithms use a pattern recognition framework to detect synthetic images and altered media, and can also examine the data embedded in a media file.

Key Features:

- Detection of AI-generated images using deep learning

- Metadata scanning and review of generative fingerprints

- A real-time interface for single or bulk image verification

- Forensic-style breakdown of images that lists traces of manipulation
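To make the metadata-scanning idea concrete, here is a minimal sketch that reads the `tEXt` chunks of a PNG file and flags values that mention a known generator. It is not Nuanced's method, which the article does not describe. The `GENERATOR_HINTS` list and the sample file are hypothetical, and the sketch assumes the generator left a label in the file's text metadata, which many tools do not.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Hypothetical hint list for illustration only; a real tool would need far more.
GENERATOR_HINTS = ("stable diffusion", "midjourney", "dall-e")


def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, then CRC over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def read_text_chunks(png_bytes: bytes) -> dict:
    """Return the keyword/value pairs stored in a PNG's tEXt chunks."""
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    fields = {}
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(png_bytes):
        length, chunk_type = struct.unpack(">I4s", png_bytes[offset:offset + 8])
        data = png_bytes[offset + 8:offset + 8 + length]
        if chunk_type == b"tEXt":
            # A tEXt chunk is "keyword", a null byte, then the text value.
            keyword, _, value = data.partition(b"\x00")
            fields[keyword.decode("latin-1")] = value.decode("latin-1")
        if chunk_type == b"IEND":
            break
        offset += 12 + length  # 4 (length) + 4 (type) + data + 4 (CRC)
    return fields


def generator_hints(fields: dict) -> list:
    """List the metadata keys whose key or value mentions a known generator."""
    hits = []
    for key, value in fields.items():
        text = f"{key} {value}".lower()
        if any(hint in text for hint in GENERATOR_HINTS):
            hits.append(key)
    return hits


# A tiny 1x1 grayscale PNG built in memory, with a made-up "Software" label.
sample_png = (
    PNG_SIGNATURE
    + make_chunk(b"IHDR", struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0))
    + make_chunk(b"tEXt", b"Software\x00Stable Diffusion (example)")
    + make_chunk(b"IDAT", zlib.compress(b"\x00\x00"))
    + make_chunk(b"IEND", b"")
)

metadata = read_text_chunks(sample_png)
print(metadata)
print(generator_hints(metadata))
```

Because metadata like this is trivial to strip or rewrite, a check of this kind can only ever be one signal among several.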

Applications:

- Journalism: Verifying visual evidence before public reporting

- Academia: Detecting AI-written passages in reports

- Cybersecurity: Protecting against visual disinformation and phishing attacks

- Digital forensics: Documenting media manipulation and misrepresentation when investigating criminal activity

Impact: In a political climate shaped by deepfakes, Nuanced helps protect public discourse and establish credibility when emerging technology threatens to distort it. It is increasingly being adopted by investigative organisations, news outlets, and educational institutions. With ongoing updates that track the pace of new AI models, Nuanced is positioned to remain relevant.

Circle to Search: An Interactive Search Engine Using AI

Circle to Search is an interactive, AI-driven search engine that puts user-friendliness first. It takes the text a user selects, parses its context, and generates search queries that return AI-enhanced results, with an emphasis on fast and accurate validation.

Key Features:

- A Chrome App with a simple interface

- AI-driven summaries of linked sources

- Filter searches by publication date, domain authority, and AI score

- Previews of search results with citation-style annotations

Applications:

- Education: Helping students identify credible sources

- Research: Helping writers and analysts validate information quickly

- Journalism: Helping reporters quickly check background information and validate sources

Ethical and educational uses: Circle to Search is not just another productivity tool. It can also be used in education to promote media literacy, because it prompts us as users to question our media sources while staying aware of the context in which we operate. That awareness is essential in a post-fact world where misinformation spreads rapidly.

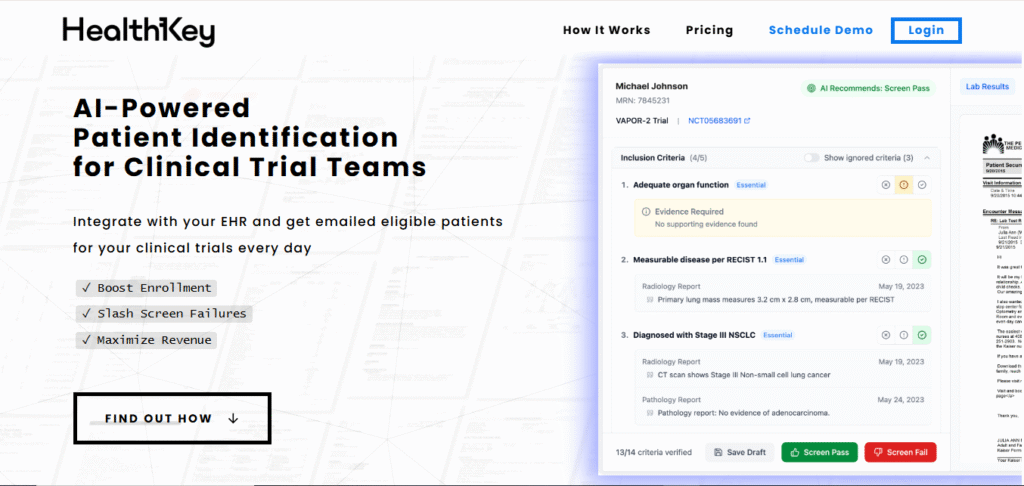

HealthKey: AI for Medical Summarization

HealthKey aims to streamline healthcare workflows by condensing large volumes of medical data into readable, actionable reports. Using conversational AI, it gives clinicians, insurers, and patients a plain-language summary in about 90 seconds.

Key Features:

- Natural Language Processing (NLP) with a focus on medical semantics

- EHR (Electronic Health Record) integration

- Average processing time of around 90 seconds per patient summary

- Data security with HIPAA and GDPR compliance.

Applications:

- Hospitals: Patient triage and physician briefings

- Insurers: Accelerating claims processing and audits

- Telemedicine: Identifying clinical issues by rapidly summarising a patient’s medical report

- Pharmaceutical research: Summarising patient reports from ongoing trials

Ethical considerations: HealthKey aims to deliver efficiencies, but the AI still carries an ethical burden regarding consent, bias, and misuse of data. Ongoing audits and explainability are required to justify trust in its output.

Extracta.ai: Structured Data Extraction from Documents

Extracta.ai uses deep learning to extract structured data from unstructured documents. Rather than just pulling out text with Optical Character Recognition (OCR), it aims to capture a document’s context, hierarchy, and intent.

Key Features:

- Drag-and-drop upload and processing

- Intelligent field recognition and mapping

- Export to Excel, PDF, or API endpoints

- Continuous learning from custom training datasets
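As an illustration of what "structured data from unstructured documents" means in practice, the sketch below turns a block of invoice text into named fields. It deliberately uses plain regular expressions as a stand-in, not the deep learning the platform is described as using, and the field names, patterns, and sample invoice are all hypothetical.

```python
import re
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class InvoiceFields:
    invoice_number: Optional[str]
    date: Optional[str]
    total: Optional[float]


# Hypothetical patterns for one invoice layout; real documents vary widely.
PATTERNS = {
    "invoice_number": re.compile(
        r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)", re.IGNORECASE
    ),
    "date": re.compile(r"\bDate\s*:\s*(\d{4}-\d{2}-\d{2})", re.IGNORECASE),
    # \b keeps "Total" from matching inside "Subtotal".
    "total": re.compile(r"\bTotal\s*:\s*\$?\s*([\d,]+\.\d{2})", re.IGNORECASE),
}


def extract_invoice(text: str) -> InvoiceFields:
    """Map free text to named fields; a field that is not found becomes None."""
    found = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(text)
        found[name] = match.group(1) if match else None
    total = float(found["total"].replace(",", "")) if found["total"] else None
    return InvoiceFields(found["invoice_number"], found["date"], total)


sample_invoice = """ACME Supplies
Invoice No: INV-2024-0042
Date: 2024-03-15
Subtotal: $1,150.00
Total: $1,234.50
"""

fields = extract_invoice(sample_invoice)
print(asdict(fields))
```

Rules like these break as soon as the layout changes, which is the gap that learned field recognition is meant to close.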

Applications:

- Legal: Review contracts and extract clauses

- Finance: Processing invoices and purchase orders, including extracting amounts

- Human Resources: onboarding documents and payroll forms

- Supply Chain: Purchase order management

Transformative Potential: Extracta.ai could drive a radical change in document-focused workflows. The platform can turn them into automated pipelines, reducing manual labor while improving accuracy and regulatory compliance.

EyePop.ai: No-Code Image and Video Analytics

EyePop.ai is a no-code platform that aims to let anyone with a computer analyse images and videos without knowing how to program.

Key Features:

- Drag-and-drop interface

- Object and face recognition with labelling capabilities

- Scene change detection and video segmentation

- Emotional heatmaps showing how an audience’s time was divided between emotions

Applications:

- Marketing: Gathering data to support emotional analysis of ad engagement

- Law enforcement: Analysing still images or surveillance footage of events in question

- Content creators: Editing and verifying imagery

- Academia: Students analysing historical media and studying media ethics

Inclusivity in Tech: Because EyePop.ai is user-friendly for non-technical users, it promotes accessibility in technology and extends its benefits to users of all kinds. This approach lowers the barriers to accessing AI and encourages an ecosystem in which these tools are used responsibly.

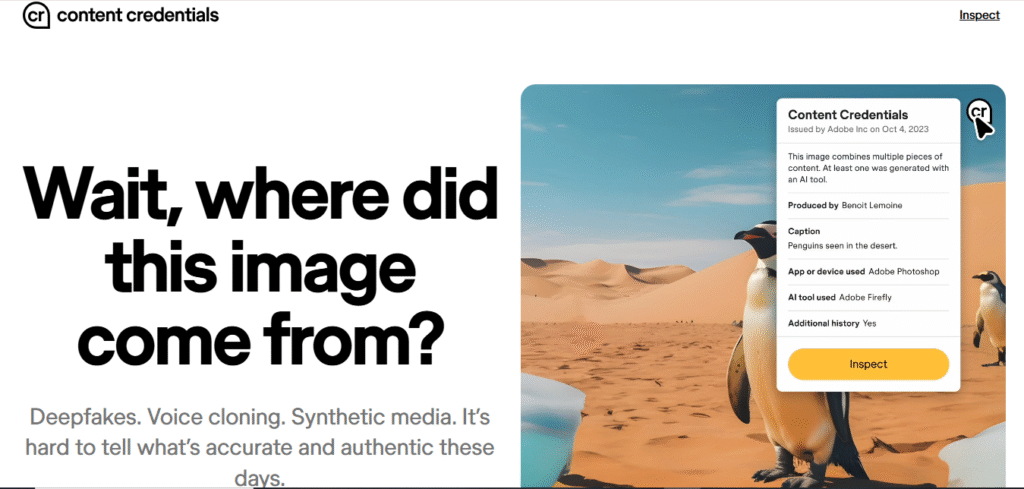

Content Credentials: Verifying Digital Provenance

As part of the Content Authenticity Initiative, Adobe’s Content Credentials help creators attach metadata to digital work to establish provenance and discourage theft and misinformation.

Key Features:

- Embedded origin and edit history

- Secure digital signatures and hashes.

- Tamper-evident provenance records (the underlying C2PA standard relies on signed manifests and does not require a blockchain)

- Integration with Adobe Photoshop, Illustrator, and other applications.
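The combination of hashes and signatures listed above can be sketched in a few lines. This is a simplified illustration, not the Content Credentials format: real credentials use certificate-based signatures defined by the C2PA standard, whereas this sketch substitutes a shared-secret HMAC from the Python standard library. The manifest layout, `SECRET_KEY`, and function names are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical demo key; a real system would sign with a private key
# tied to a certificate, not a shared secret.
SECRET_KEY = b"demo-key-not-for-production"


def make_manifest(asset: bytes, creator: str, edits: list) -> dict:
    """Bind a creator and edit history to the asset's hash, then sign the claim."""
    claim = {
        "creator": creator,
        "edits": edits,
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(asset: bytes, manifest: dict) -> bool:
    """Check that the claim is unmodified and still matches the asset bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    hash_ok = manifest["claim"]["asset_sha256"] == hashlib.sha256(asset).hexdigest()
    return signature_ok and hash_ok


original = b"raw image bytes would go here"
manifest = make_manifest(original, "Example Photographer", ["crop", "colour balance"])

print(verify_manifest(original, manifest))                 # unchanged asset
print(verify_manifest(original + b" tampered", manifest))  # altered asset
```

The point of the design is that changing either the asset or the recorded history invalidates the check, so edits cannot be hidden without access to the signing key.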

Applications:

- Photojournalism: Signaling when a captured moment is authentic

- Digital Art: Claiming ownership and tracing uses of digital art.

- Brand Marketing: Making sure visuals used in campaigns are authentic.

- Social media: Curbing the spread of altered images.

Challenges: Despite the strength of the idea, Content Credentials faces several challenges, including standardization, creator adoption, and legacy issues.

Comparative Analysis: Feature Matrix and Industry Impact

| Tool | Focus Area | Core Strength | Industries Benefited |
| --- | --- | --- | --- |
| Nuanced | Image authenticity | AI-generated content detection | Media, Academia, Security |
| Circle to Search | Contextual search | Real-time verification | Education, Journalism |
| HealthKey | Medical summaries | NLP for healthcare | Healthcare, Insurance |
| Extracta.ai | Document processing | Structured data extraction | Legal, Finance, Enterprise |
| EyePop.ai | Visual media | No-code accessibility | Marketing, Law Enforcement |
| Content Credentials | Digital provenance | Metadata-based verification | Media, Branding, Art |

These tools have different approaches to the trust problem in digital content: verifying sources, exposing fraud, and recording edits. But they will all play a part in creating an authentic internet.

What’s Next for AI and Content Authenticity

Detection tools will become more important and more widely used as synthetic content improves in quality. Each new generation of more sophisticated generative AI raises fresh concerns and strengthens the need for counterbalances, so the role of detection tools will grow in both scope and scale.

Trends:

- Legal integration: AI detection tools are being integrated into compliance tools to respond to regulatory demands.

- Watermarking and fingerprinting: As AI content becomes more ubiquitous, it is increasingly tagged with invisible markers that detection tools can analyze.

- Social media moderation: Social media companies, including Meta and X (formerly Twitter), are developing and testing APIs for detection tools.

- Policy and regulation: Many regions, including Europe and North America, are proposing or passing laws that require disclosure when content is generated by AI/ML. Detection tools are expected to play a major role in enforcing compliance.

- Collaboration: AI developers, regulators, academic communities, and civil society need to work together to create standards that protect truth while fostering innovation.
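The invisible markers mentioned under watermarking can be illustrated with a toy least-significant-bit (LSB) scheme. This is a teaching sketch only and not how any particular vendor tags content: production watermarks are designed to survive compression and editing, which this one would not. The `MARK` bit pattern and the pixel values are hypothetical.

```python
# Hypothetical 8-bit marker; a real scheme would use a longer, keyed pattern.
MARK = [1, 0, 1, 1, 0, 0, 1, 0]


def embed_watermark(pixels: list, bits: list) -> list:
    """Write each marker bit into the least significant bit of one pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("image too small for this watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract_watermark(pixels: list, length: int) -> list:
    """Read back the lowest bit of the first `length` pixel values."""
    return [value & 1 for value in pixels[:length]]


def has_watermark(pixels: list, bits: list) -> bool:
    """Report whether the expected marker is present at the start of the image."""
    return extract_watermark(pixels, len(bits)) == bits


# Eight grayscale pixel values (0-255) standing in for an image.
pixels = [200, 13, 77, 154, 90, 31, 248, 5]
marked = embed_watermark(pixels, MARK)

print(marked)
print(has_watermark(marked, MARK), has_watermark(pixels, MARK))
```

Each pixel value changes by at most 1, which is why the mark is invisible to the eye while remaining easy for a detector to read.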

Conclusion

Artificial intelligence is changing not only how we produce content but also how we perceive and trust it. With more deepfakes and AI-produced media appearing daily, developing mechanisms for detection and content analysis is no longer merely recommended; it is required.

Companies like Nuanced, HealthKey, Extracta.ai, and EyePop.ai are leading the way toward a future where authenticity does not rely on chance. Coupled with tools like Content Credentials and Circle to Search, they make it easier to protect ourselves and others from misinformation and manipulated content.

The challenge ahead is ensuring that these tools are widely available and that their use is ethical and transparent. As individuals, creators, and organizations adjust to these new circumstances, one thing is clear: the fight for authenticity is one of the most difficult, and perhaps most important, fights of our time, and AI can serve as both a weapon and a shield in it.

FAQs

1. What is AI image detection?

AI image detection is the use of artificial intelligence to scan images and determine their provenance, authenticity, or content. These analysis tools identify whether an image was generated or altered by AI through pattern recognition, metadata analysis, and generative fingerprints.

2. How do AI content analysis tools work?

AI content analysis tools use machine learning, natural language processing (NLP), and deep neural networks to extract meaning, context, and insights from visual and text data. They are used to detect fake content, summarize complex data, and verify the provenance of digital content.

3. Why is AI content verification needed?

With deepfakes, fake news, and AI-generated misinformation spreading rapidly, it is hard to trust content in journalism, health care, education, or other digital media unless it can be verified. AI content verification helps limit false information and supports the ethical use of media.

4. Can the tools detect deepfakes?

Yes. Many tools, like Nuanced and EyePop.ai, have been developed specifically to help identify deepfakes and AI-altered media. The software scans for pixel-level inconsistencies, motion artifacts, and in some cases generative signatures to flag problematic content.

5. Is metadata analysis sufficient to determine an image’s authenticity?

No. Metadata such as timestamps and device details is a helpful layer of verification, but it can be faked. That is why advanced tools also rely on pattern recognition, tamper-evident provenance records, and digital watermarks, such as those used in Content Credentials, for stronger verification.

6. Are AI detection tools for professionals only?

No. Platforms like EyePop.ai provide no-code interfaces that give access to non-technical users in education, journalism, and marketing, including students. These platforms are built to demystify content verification.

This article highlights the growing importance of AI-based tools in combating digital misinformation, which is both timely and necessary. The focus on tools like Nuanced, Circle to Search, and Content Credentials shows how innovation is addressing the trust crisis in digital content. However, I wonder if these tools will be accessible to everyone or if they’ll remain limited to tech-savvy users or organizations. The ethical implications mentioned are crucial, but how do we ensure transparency in their use? It’s encouraging to see companies taking the lead, but will this create a dependency on specific platforms? The idea of AI as both a weapon and a shield is fascinating, but how do we balance its dual role effectively? What’s your take on the potential risks of over-reliance on these tools?

This article presents a compelling view on the role of AI in combating digital misinformation. The tools mentioned, like Nuanced and Content Credentials, seem to offer promising solutions, but I wonder how accessible they will be to the average user. Ethical considerations are crucial, and transparency in how these tools operate is a must. The idea of AI being both a weapon and a shield is particularly intriguing. How do we ensure these tools don’t become gatekeepers of truth? The focus on authenticity is undeniably important, but I’m curious about potential biases in these systems. Could they inadvertently favor certain narratives over others? Lastly, do you think these tools alone are enough, or do we need a broader societal shift in how we approach digital content? What’s your take on this balance between technology and human judgment?
